I can’t lie. It’s been a while since I last dived seriously into server log file analysis.

When I was carrying it out somewhat frequently, did I find anything critical to the site’s health that I probably wouldn’t have discovered otherwise? On occasion, but honestly, not as often as you might think.

There were some incredible insights, sure, but often not critical insights that felt like they unlocked a site’s full potential, the silver bullet that it can often feel like it should be with the curtain being pulled back as to how search engine bots are navigating your site.

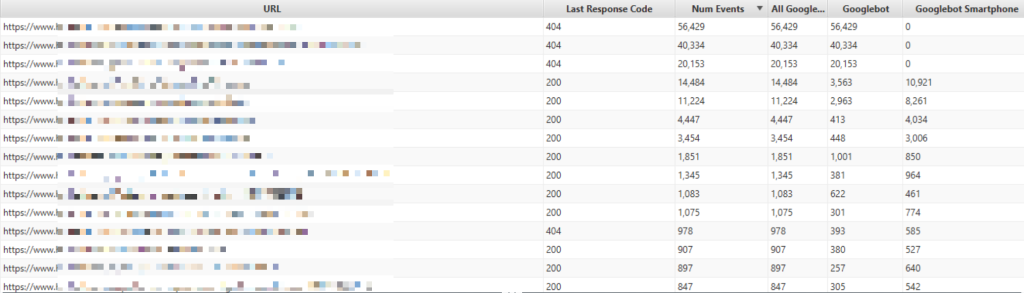

For example, on one of my previous forays into log file analysis, I was able to unearth a bizarre pattern of three 404 pages, resulting in the highest number of bot events. These pages didn’t show up during a crawl of Screaming Frog, nor did they have any external backlinks.

A real headscratcher.

The thing is, I still didn’t quite get to the bottom of what was causing this particular issue (even after consulting with a number of other leading SEOs who are far smarter than myself), and there was greater SEO breakthroughs with other initiatives not related to this data which didn’t take up the time or resource that log file analysis can.

Of course, seeing the pages on your website sorted by the number of visits from Googlebot can undoubtedly be fascinating. Helping to identify issues around internal linking, site structure failings, and other navigational problems that aren’t picked up as quickly or easily by other tools remains a huge advantage of this approach.

But, log file analysis can lead to challenges of its own. Particularly around client buy-in (if you work within an agency). As SEOs in this position often, our point of contact will be a broadly skilled marketer. As a result, their familiarity with server logs is probably quite low. If you’re lucky, you will also likely have communications with a developer resource. Even if that’s the case, their eagerness to share the logs with you might not be as high as your enthusiasm to get the analysis underway.

An Alternative to Traditional Log File Analysis for SEOs?

So, is there an alternative? Maybe.

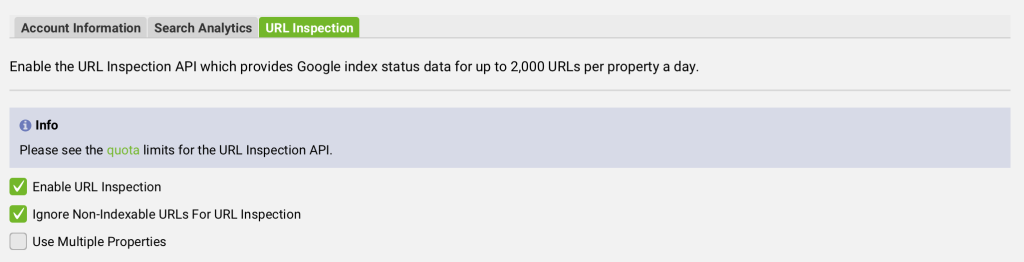

The approach I’m going to jump into here is using Google Search Console API and specifically the URL Inspection > Enable URL Inspection feature.

This provides data directly from Google Search Console as if inspecting a URL directly within the tool itself. So, as you can imagine, being able to do this across a website for 100s of URLs at once saves plenty of time.

When the crawl is complete, we get a heap of data. But there’s a couple of columns in particular that I choose to focus on, primarily:

- Days since last crawl

- Last Crawl

The above basically tell you the same thing but I’ll circle back to as to see I document both a little later (its just to double check I have the correct data).

Secondary data:

- Organic Clicks (time period of your choice)

- Ahrefs backlink data

- Unique inlinks – Note: Would only advise if you are crawling a whole site as won’t be accurate if you are only using list mode, you also need to work a bit of magic to remove nav/head links in order to concerate on those in the body copy.

The title ‘secondary data’ is a bit misleading as this stuff is just, if not more important for drawing insights and plotting next steps.

Claude’s Time to Shine (Again)

Using an export of this data, we can then head over to Claude and let it work its magic. Here is an example of the initial prompt I used:

I am carrying out an analysis of a website using Screaming Frog. I have connected Google Search Console API to get an idea of how often individual pages of the website are being crawled by Googlebot and and if pages aren’t being crawled frequently, if there is any correlation between other metrics such as the number of clicks that those pages are getting or the number of internal or external links they have. Help me analyze the data in the csv file uploaded.

Again, as in my previous post – prompt skills needed some improvement

Notes for self:

- How can we improve the prompt to help with these insights?

- Are there correlations that can be pulled from pages that are crawled less frequently? Can we feed Cluade more data from the wider Screaming Frog crawl to help here.

But with this prompt or something similar, you should be able to get something back like the following:

Breaking Down the Data And Importantly Drawing Actionable Insights

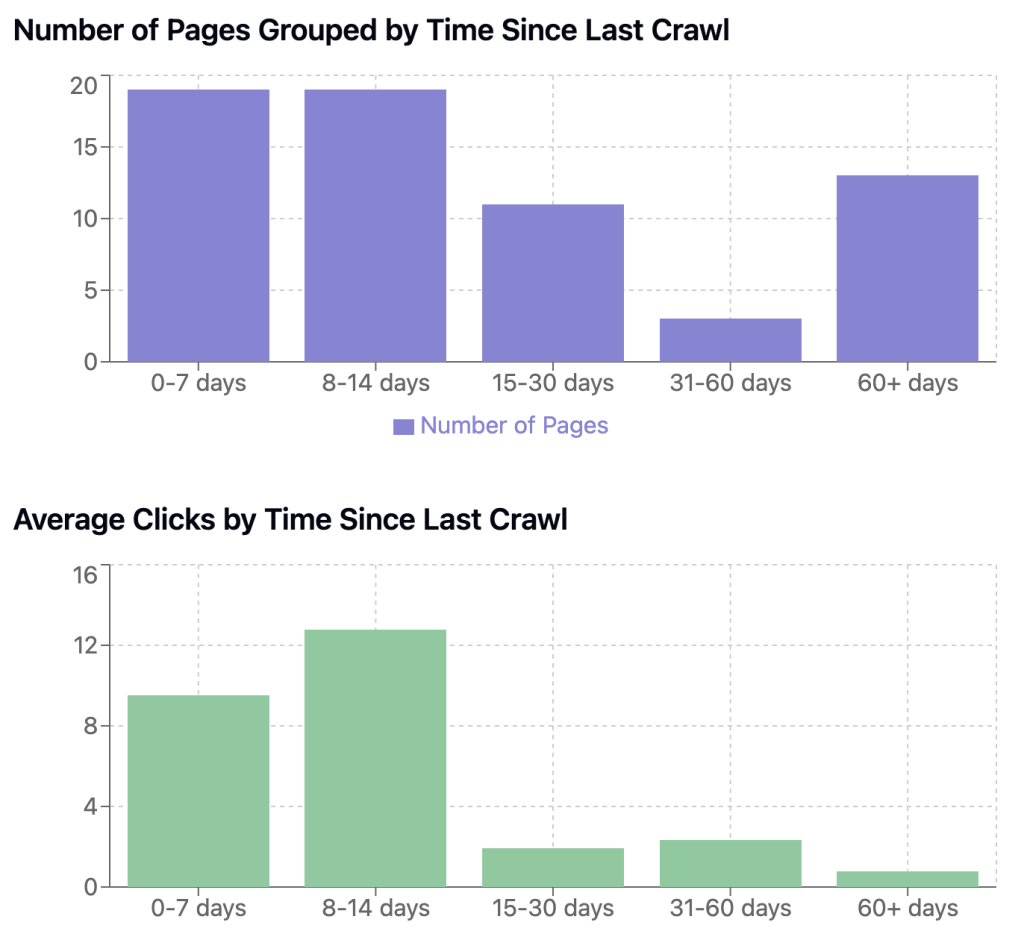

Using the real example I generated above, lets explore some of the initial questions that likely come from this process and the charts generated:

So what, what does the data actually tell us?

What can we make as a next step?

So there are lots of different directions you can go in as a next step here; as a note, for this process, I was trying to look at data relating to when each new piece of content I had helped to create within the last 6 months was last crawled, which is the charted generated above.

Continuing to use the above example, from the very early data, there is a drop off in the average number of clicks when a page was crawled more than 15+ days ago.

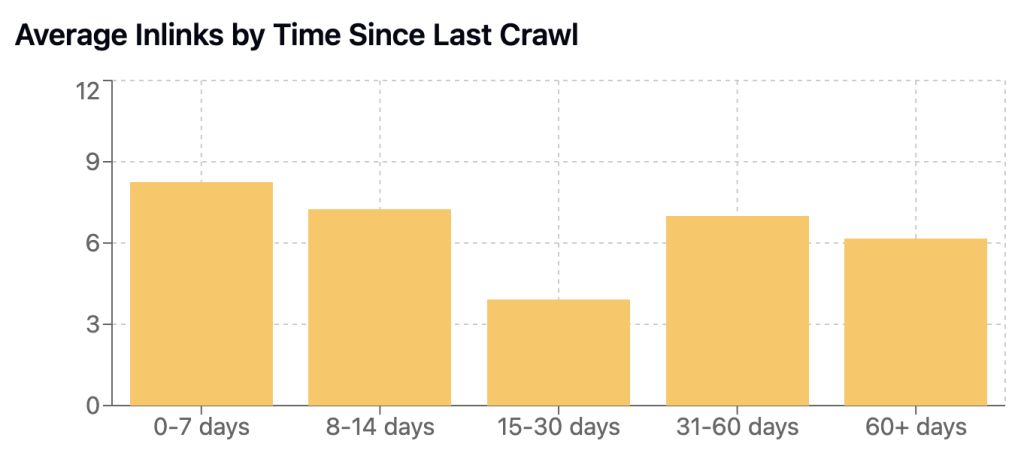

Interestingly, there wasn’t a clear correlation between the average number of internal links and time since the last crawl.

So it doesn’t look to be a case of building more internal links to the less frequently crawled pages but maybe also where we are creating these internal links from (for example, pages that have already picked up more traction/visibility organically and as a result are potentially being crawled more frequently as well).

For this example, I unfortunately didn’t connect the Ahrefs API, so no backlink data was available. However, that will definitely be my next step.

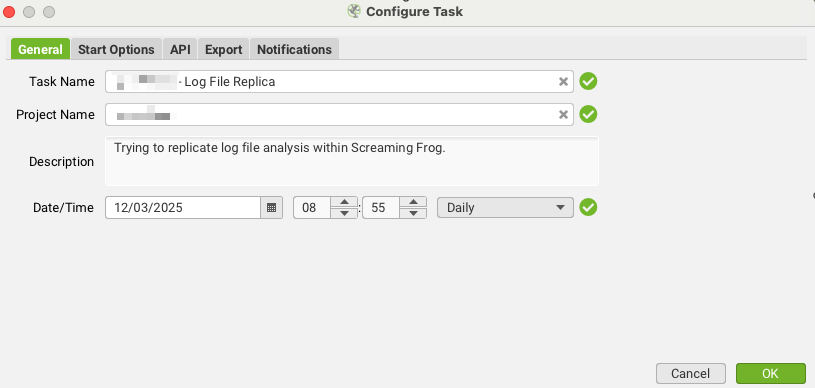

As will setting up scheduled crawls of a select number of pages and crawling every day over a set period, likely 5-10 days. With this I’ll be able to see just how often each page is getting crawled across this period. Something not too dissimilar to what server logs would tell us.

With these automated crawls, my next step will be to create a Google sheet where individual crawls can be uploaded to. The sheet will contain an Overview tab showing the data for each page and its crawl frequency based on these daily crawls. Hopefully this will be in a position to share by the next time I sit down to blog!

Leveraging the GSC API will also serve as an ‘indexed checker’ to ensure the site’s key pages are indexed, and if not for any reason on a particular day, flag this issue quickly.

Until next time.

GSC API x AI Insight

For full transparnecy, here is Claude’s full reply to the prompt shared above:

Crawl Frequency Overview

All 65 pages in your data are part of the glossary section. The crawl frequency varies significantly:

- Very Recent (0-7 days): 19 pages (29.2%)

- Recent (8-14 days): 19 pages (29.2%)

- Moderate (15-30 days): 11 pages (16.9%)

- Old (31-60 days): 3 pages (4.6%)

- Very Old (60+ days): 13 pages (20.0%)

The average time since last crawl is 27 days, with some pages not crawled for as long as 130 days.

Correlations with Crawl Frequency

I found meaningful correlations between crawl frequency and other metrics:

- Clicks vs Crawl Frequency: Moderate negative correlation (-0.26)

- Pages crawled more recently tend to have more clicks

- Recently crawled pages (0-14 days) average 9-13 clicks

- Older pages (60+ days) average less than 1 click

- Impressions vs Crawl Frequency: Stronger negative correlation (-0.41)

- Recently crawled pages average 4,750-5,665 impressions

- Older pages (60+ days) average only 627 impressions

- Internal Links vs Crawl Frequency: Weak negative correlation (-0.09)

- The relationship between internal links and crawl frequency is less clear

- Recently crawled pages (0-7 days) average 8.26 internal links

- Very old pages (60+ days) average 6.15 internal links

Least Frequently Crawled Pages

These pages have gone the longest without being crawled:

/page-1/– 130 days, 1 click, 9 internal links/page-2/– 118 days, 0 clicks, 2 internal links/page-3/– 102 days, 0 clicks, 6 internal links/page-4/– 89 days, 0 clicks, 3 internal links/page-5/– 86 days, 1 click, 8 internal links

Most Frequently Crawled Pages

These pages were crawled most recently:

/page-1/– 0 days, 6 clicks, 1 internal link/page-2– 2 days, 8 clicks, 13 internal links/page-3/– 3 days, 7 clicks, 3 internal links/page-4/– 3 days, 3 clicks, 1 internal link/page-5/– 4 days, 15 clicks, 3 internal links

Key Insights

- User Engagement Drives Crawl Frequency: Pages with more clicks and impressions are crawled more frequently. Google appears to prioritize crawling content that users engage with.

- Internal Links Have Less Impact: The correlation between internal links and crawl frequency is weaker than expected. Some frequently crawled pages have few internal links, while some rarely crawled pages have many.

- A Significant Portion of Pages are Under-crawled: 20% of your glossary pages haven’t been crawled in over 60 days, potentially affecting their search visibility.

Recommendations

- Focus on User Engagement: Create more engaging content to drive clicks and impressions, which appears to significantly influence crawl frequency.

- Internal Linking Structure: While the correlation is weak, consider reviewing your internal linking strategy. Ensure important but rarely crawled pages receive more internal links from high-traffic pages.

- Content Updates: Consider updating older, less frequently crawled pages to encourage Google to revisit them.

- Submit URL for Indexing: For critical pages that haven’t been crawled in a long time, use Google Search Console’s “Request Indexing” feature.

Leave a Reply